
WWise Dynamic Music

This dynamic music project demonstrates music that transitions smoothly in response to AI and gameplay mechanics within Unreal Engine 5, driven by WWise states and RTPCs. It also features projectile pass-by sound effects that play when bullets narrowly miss the player, using spatialization and attenuation to enhance immersion and give clearer auditory feedback. Alongside this, I implemented a simple heartbeat effect that applies a low-pass filter to the final output of the audio bus and adjusts the overall volume through RTPCs.
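The low-pass filtering behind the heartbeat effect happens inside WWise, but the idea can be illustrated outside the engine. The following standalone C++ sketch applies a generic one-pole low-pass filter and a volume change to a buffer of samples. It is not WWise's actual bus filter, and the function name, the `coefficient` parameter and the dB gain are hypothetical stand-ins for values an RTPC might drive.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical sketch: a one-pole low-pass filter followed by a volume change,
// standing in for the bus-level processing used by the heartbeat effect.
// coefficient in [0, 1): 0 leaves the signal unfiltered, values near 1 muffle it heavily.
std::vector<float> ApplyLowPassAndGain(const std::vector<float>& input,
                                       float coefficient, float gainDb) {
    assert(coefficient >= 0.0f && coefficient < 1.0f);
    const float linearGain = std::pow(10.0f, gainDb / 20.0f);  // dB -> linear amplitude
    std::vector<float> output;
    output.reserve(input.size());
    float state = 0.0f;  // previous filter output, y[n - 1]
    for (float sample : input) {
        // y[n] = (1 - a) * x[n] + a * y[n - 1]
        state = (1.0f - coefficient) * sample + coefficient * state;
        output.push_back(state * linearGain);
    }
    return output;
}
```

Raising `coefficient` removes more high-frequency content (the "muffled" quality), while lowering `gainDb` reduces the overall level, which is roughly the combination of changes the heartbeat effect makes to the bus output.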

This was developed as part of a final-year audio programming module focusing on the positive impact of audio programming techniques on player experience. I developed several key systems and components, such as an AI subsystem that globally tracks the enemies chasing the player, and a player audio component that manages WWise events, state changes and RTPC changes so that music and sound effects respond seamlessly to gameplay logic. I took a primarily event-driven, modular approach, allowing components to bind to delegates within different actors and the AI subsystem, which improved readability, maintainability and encapsulation.

I was particularly inspired to implement dynamic music by games such as Project Zomboid, which show how this technique can heighten tension and excitement within the game world, improving immersion and making the gameplay experience more enjoyable.

Technical Features

Dynamic Music Transitioning

The dynamic music in this project reflects whether any enemies are currently chasing the player, transitioning from a peaceful track to a more tense and exciting one. As more enemies notice the player, the main track becomes quieter while several instruments increase in volume. I took this approach to represent the growing tension the player feels as more enemies put pressure on them, providing auditory feedback and creating a sense of urgency.
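In WWise, this kind of behaviour is typically expressed as an RTPC curve that maps a game parameter (here, the number of chasing enemies) to a property such as volume. As a hedged illustration, the C++ sketch below evaluates a piecewise-linear curve the way a linear RTPC graph behaves between its points. The curve values, the `EvaluateCurve` helper and the curve names are hypothetical and are not taken from the project.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// A curve point: (RTPC value, volume offset in dB). Points must be sorted by x.
using CurvePoint = std::pair<float, float>;

// Evaluates a piecewise-linear curve, clamping outside the first/last point,
// similar in spirit to a linear RTPC graph.
float EvaluateCurve(const std::vector<CurvePoint>& points, float x) {
    assert(!points.empty());
    if (x <= points.front().first) return points.front().second;
    if (x >= points.back().first) return points.back().second;
    for (std::size_t i = 1; i < points.size(); ++i) {
        if (x <= points[i].first) {
            const auto& [xa, ya] = points[i - 1];
            const auto& [xb, yb] = points[i];
            const float t = (x - xa) / (xb - xa);  // position between the two points
            return ya + t * (yb - ya);
        }
    }
    return points.back().second;  // not reached when points are sorted
}

// Hypothetical curves (RTPC value = number of chasing enemies):
// the main chase track ducks while an instrument layer rises.
const std::vector<CurvePoint> kMainTrackCurve = {{0.0f, 0.0f}, {5.0f, -12.0f}};
const std::vector<CurvePoint> kPianoLayerCurve = {{0.0f, -48.0f}, {1.0f, -24.0f}, {5.0f, 0.0f}};
```

With these example points, two chasing enemies would lower the main track by about 4.8 dB while raising the piano layer from -48 dB to -18 dB.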

There are two types of enemy agents in the world, turrets and hovertanks, both inheriting from the base class AudioEnemy. This base class is primarily responsible for informing the AudioAIController when the player is spotted, using the AIPerceptionComponent. It also notifies the AudioEnemySubsystem, allowing WWise to respond dynamically to enemies chasing the player by modifying RTPC values and performing state changes in an event-driven way. The base class also acts as a container for other components, including the EnemyAudioComponent, which triggers WWise events when the enemy fires a projectile or explodes on contact with the player.

Both enemy agents register themselves with the AudioEnemySubsystem upon spotting the player. This subsystem tracks how many enemies are engaging the player and broadcasts events to the AudioPlayerComponent, which then updates WWise RTPCs and performs state changes through Blueprint-implementable events. This allows WWise to adapt to changing gameplay conditions, switching the current track as well as changing which instruments are playing.
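The register-and-broadcast flow described above can be modelled without Unreal as a small observer pattern. In this hypothetical C++ sketch, `ChasingEnemyTracker` stands in for the AudioEnemySubsystem, a list of `std::function` callbacks imitates a multicast delegate, and `PlayerAudioState` plays the role of the player audio component. The state names "Chased" and "Hidden" and the use of integer enemy IDs are assumptions for illustration; the project itself uses Unreal delegates and Blueprint-implementable events.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <unordered_set>
#include <utility>
#include <vector>

// Hypothetical stand-in for the AudioEnemySubsystem: tracks which enemies are
// chasing the player and notifies listeners (like a multicast delegate) on change.
class ChasingEnemyTracker {
public:
    using Listener = std::function<void(int chasingCount)>;

    void AddListener(Listener listener) { listeners_.push_back(std::move(listener)); }

    void RegisterChasingEnemy(int enemyId) {
        if (chasing_.insert(enemyId).second) Broadcast();  // ignore duplicate registrations
    }

    void UnregisterChasingEnemy(int enemyId) {
        if (chasing_.erase(enemyId) > 0) Broadcast();  // ignore unknown enemies
    }

    int ChasingCount() const { return static_cast<int>(chasing_.size()); }

private:
    void Broadcast() const {
        for (const auto& listener : listeners_) listener(ChasingCount());
    }

    std::unordered_set<int> chasing_;
    std::vector<Listener> listeners_;
};

// Hypothetical listener in the role of the player audio component:
// it turns the enemy count into a music state name and an RTPC value.
struct PlayerAudioState {
    std::string musicState = "Hidden";
    float enemyAmountRtpc = 0.0f;

    void OnChasingCountChanged(int count) {
        musicState = count > 0 ? "Chased" : "Hidden";
        enemyAmountRtpc = static_cast<float>(count);
    }
};
```

Using a set rather than a plain counter means an enemy that reports spotting the player twice is only counted once, and unregistering an enemy that was never registered has no effect.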

I implemented dynamic music transitioning within WWise through a series of states, music containers and a switch container. The tracks themselves are stored inside a series of music segments that represent the underlying chase or hidden music, alongside two instrument tracks. These segments are grouped into music containers, which are then organized within a switch container. This switch container selects which track to play based on the PlayerChasedStateGroup state, enabling the music to respond dynamically to gameplay, reflecting the player's precarious situation.

I also added a slight delay in Blueprints before swapping between the tracks to keep the transition smooth. Without it, rapid back-and-forth changes between the tracks would be jarring and break player immersion.
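One way to realize such a delay, sketched here in C++ rather than Blueprints, is to debounce the requested state: a new state is only committed once it has been requested continuously for a set time. This is an assumption about the technique, not a reproduction of the project's Blueprint logic, and the class name, state names and delay value are hypothetical.

```cpp
#include <cassert>
#include <string>

// Hypothetical sketch: commits a requested music state only after it has been
// requested continuously for `delaySeconds`, so brief flickers between
// "Chased" and "Hidden" never reach the switch container.
class DelayedStateSwitcher {
public:
    DelayedStateSwitcher(const std::string& initialState, float delaySeconds)
        : current_(initialState), pending_(initialState), delay_(delaySeconds) {
        assert(delaySeconds >= 0.0f);
    }

    void Request(const std::string& state) {
        if (state != pending_) {
            pending_ = state;
            elapsed_ = 0.0f;  // restart the timer whenever the target changes
        }
    }

    // Advances time; returns true on the tick where the committed state changes.
    bool Tick(float deltaSeconds) {
        if (pending_ == current_) return false;
        elapsed_ += deltaSeconds;
        if (elapsed_ >= delay_) {
            current_ = pending_;
            return true;  // this is where the WWise state would actually be set
        }
        return false;
    }

    const std::string& Current() const { return current_; }

private:
    std::string current_;
    std::string pending_;
    float delay_;
    float elapsed_ = 0.0f;
};
```

If the player is spotted and then immediately breaks line of sight, the pending change is discarded before the delay elapses, so the music never flips back and forth.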

Overall, this system works as intended, increasing tension and excitement for the player. It appropriately reflects the player's situation, increasing immersion and effectively linking the music to gameplay mechanics. However, on reflection, a crossfade between the two tracks would have been more appropriate than a delay and could have produced a smoother transition. I could also have called the SetState node directly in Blueprints instead of posting a custom WWise event, reducing the number of events used and making the implementation more efficient.
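In WWise itself, a crossfade would normally be configured through the switch container's transition rules rather than written by hand. For intuition, the C++ sketch below shows the gain math behind an equal-power crossfade, one common choice for blending two music tracks; the function and struct names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct CrossfadeGains {
    float outgoing;  // gain applied to the track being faded out
    float incoming;  // gain applied to the track being faded in
};

// Equal-power crossfade: progress runs from 0 (only the old track) to 1 (only the new one).
// The cos/sin curves keep outgoing^2 + incoming^2 == 1 throughout the fade.
CrossfadeGains EqualPowerCrossfade(float progress) {
    assert(progress >= 0.0f && progress <= 1.0f);
    const float halfPi = 1.57079632679f;
    return {std::cos(progress * halfPi), std::sin(progress * halfPi)};
}
```

Compared with a linear fade, where both tracks sit at half amplitude at the midpoint, the equal-power curves keep the combined energy constant, which avoids an audible dip in loudness during the transition.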

Screenshot of TargetPerceptionUpdated method registering with the AudioEnemySubsystem upon spotting the player

Screenshot of PlayerAudioComponent binding Blueprint-implementable events to delegates within AudioEnemySubsystem that update WWise dynamically

Screenshot of DynamicMusicSwitchContainer showcasing how states automatically select which music segment to play

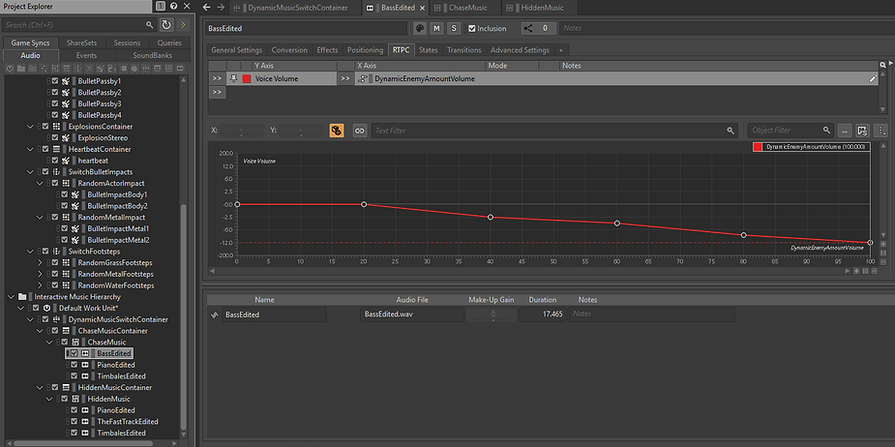

Screenshot of DynamicEnemyAmountVolume RTPC affecting volume of Piano instrumental track

Screenshot of DynamicEnemyAmountVolume RTPC affecting volume of the main Chase Music track

Projectile Pass-by Effect

The projectile pass-by effect relies on the turret enemies. When the player moves within range, the turrets rotate towards the player and begin firing projectiles. When a projectile misses the player, it plays a quick "whizz" sound effect that pans left or right, using spatialization and attenuation configured directly within WWise. This increases tension and player engagement, as the sound relates directly to the player's action of dodging the projectile, providing immediate feedback and enhancing game feel.

To ensure that the whizz sound effect only plays when the projectile misses, a line cast is performed to predict whether the projectile will collide with the player. This check runs only when the projectile collides with a large collision box, BulletPassByBoxCollider, within the AudioPlayerCharacter class. I chose this collider approach to avoid performing line casts every frame, which could severely impact performance with large numbers of projectiles.
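The project's PredictProjectileCollision method uses a line cast against the player. As a self-contained approximation of the same decision, the C++ sketch below swaps in an analytic closest-approach test that treats the player as a sphere and assumes the projectile travels in a straight line. The vector type, the function name and the sphere approximation are assumptions for illustration only.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
inline float Dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline float Length(Vec3 a) { return std::sqrt(Dot(a, a)); }

// Hypothetical analytic stand-in for the line cast: treats the player as a sphere
// and checks whether the projectile's straight-line path ever enters it.
bool PredictProjectileMisses(Vec3 projectilePos, Vec3 projectileVel,
                             Vec3 playerPos, float playerRadius) {
    const float speedSq = Dot(projectileVel, projectileVel);
    assert(speedSq > 0.0f && playerRadius > 0.0f);
    // Time of closest approach along the path, clamped to 0 so the past is ignored.
    float t = Dot(playerPos - projectilePos, projectileVel) / speedSq;
    if (t < 0.0f) t = 0.0f;
    const Vec3 closest = projectilePos + projectileVel * t;
    return Length(playerPos - closest) > playerRadius;
}
```

Because a check like this only runs when a projectile enters the collider, rather than every frame for every projectile, its cost stays small even with many projectiles in flight.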

If the line cast predicts that the projectile will miss, a WWise event is triggered through the Blueprint-implementable event PlayProjectileWhizzEffect in the ProjectileAudioComponent class to play a bullet whizz sound effect. This sound is spatialized in WWise using Listener Relative Routing, allowing it to pan dynamically left or right depending on which side of the player the projectile passes. Attenuation is applied through a shared attenuation set, so the sound becomes louder the closer the projectile passes to the player.
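WWise performs this panning and attenuation internally from the emitter and listener positions. To illustrate the underlying idea, the hypothetical C++ sketch below derives a left/right pan from the projection of the listener-to-projectile direction onto the listener's right vector, and a volume factor from a linear distance falloff. Real attenuation sets use editable curves, and the names and the `maxDistance` parameter here are assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

inline float Dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Pan in [-1, 1] (-1 = fully left, +1 = fully right) and a linear volume factor in [0, 1].
struct WhizzMix {
    float pan;
    float volume;
};

// Hypothetical sketch of what spatialization and attenuation compute for the whizz.
// listenerRight is assumed to be a unit vector.
WhizzMix ComputeWhizzMix(Vec3 listenerPos, Vec3 listenerRight, Vec3 sourcePos,
                         float maxDistance) {
    assert(maxDistance > 0.0f);
    const Vec3 toSource = {sourcePos.x - listenerPos.x, sourcePos.y - listenerPos.y,
                           sourcePos.z - listenerPos.z};
    const float distance = std::sqrt(Dot(toSource, toSource));
    // Cosine of the angle between the source direction and the listener's right vector.
    const float pan = distance > 0.0f
        ? std::clamp(Dot(toSource, listenerRight) / distance, -1.0f, 1.0f)
        : 0.0f;
    // Linear falloff: full volume at the listener, silent at maxDistance and beyond.
    const float volume = std::clamp(1.0f - distance / maxDistance, 0.0f, 1.0f);
    return {pan, volume};
}
```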

Overall, this feature provides an extra layer of feedback when the player is attacked by turret enemies, linking a player action (dodging projectiles) to an immediate auditory response. The whizz sound effects serve their intended purpose; however, because the prediction is made when the projectile first reaches the collider, the player can occasionally move into its path afterwards, so the sound plays even though the projectile does not fully miss. To mitigate this, the predictive system could be refined by performing the line cast closer to the player, leaving less time for the player's movement to invalidate the prediction.

Screenshot of PredictProjectileCollision method determining if projectile will collide with the player.

Screenshot of Spatialization and Attenuation settings applied within WWise to the projectile whizz sound effect

Conclusion

Although I previously had some professional experience using the WWise profiler to debug event-firing issues and add audio hooks to gameplay systems, I had not yet implemented gameplay systems explicitly with WWise integration in mind. This module was therefore particularly interesting to me, as I learned a significant amount of fundamental audio programming knowledge while having the freedom to apply my own audio programming techniques within a small game environment.

During development, I applied several fundamental audio programming concepts within WWise, such as RTPCs, events, spatialization and attenuation, alongside audio bus manipulation for the heartbeat effect and reverberation techniques.

Overall, my implementation of dynamic music within WWise demonstrates how linking gameplay mechanics to dynamic audio can enhance player immersion and engagement, creating a more exciting and enjoyable gameplay experience.